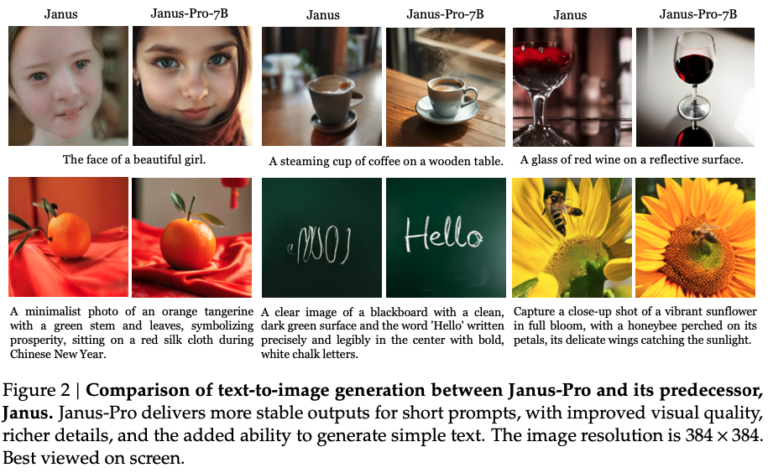

A comprehensive guide to DeepSeek: usage techniques that 90% of people don’t know (worth bookmarking)

Since DeepSeek-V3 was released a month ago, I have been publishing articles and videos about DeepSeek because I think it is a truly awesome company. Then, yesterday, it finally made history by topping the US Apple App Store,…